Exploration: Augmented Reality for Musicians

Transforming passive consumption into personal expression

That singer’s reality just got augmented!

Exploring Responsive Audio Augmented Reality — RAAR!

Audio is the neglected realm of augmented reality. Yet it offers experiences that go well beyond adding sound to the visual elements of visually based AR. Audio is personal, internal, and emotional, and it can be nearly identical to a real-world listening experience, especially when it responds to and synchronizes with the user’s own actions.

I explored this idea with an app prototype in which a singer begins singing “Part of Your World” from The Little Mermaid. The app hears her and augments her reality, the here and now, with a real orchestra recording that fits her singing: it waits for her to reach certain points in the melody and anticipates her timing so the AR orchestra stays in sync with her. The computation is light, but the impact is dramatic and personal.

“It’s reading your mind!”

That sums up how RAAR actually feels. No one expects recorded music to listen to them and to anticipate the timing of their singing so that it stays perfectly in sync with them. But the feeling of having their minds read is exactly what singers reported when they tried our AR prototype. The potential of responsive audio augmented reality is just waiting to be explored.

Responsive AR Prototype

What if a movie could listen to you sing, and match your expression in real time?

In this video, Frances is watching The Little Mermaid on her iPad. When she sings “Part of Your World,” the movie listens to her and fits itself, including its sound effects, to her singing. Near the end, the timing changes when Frances decides to sing it again, but faster.

The Little Mermaid

Imagine watching an animated Disney classic, like The Little Mermaid. But this time, when your favorite song comes along, just sing it, and the entire movie, including the audio, magically fits your voice, your expression, your timing. Any media can be driven by RAAR, including video, 360° video, audio, and MIDI (for stage lighting, set motions, etc.).

Now, instead of a viewer driving the experience as a “sing along,” imagine a film production setting. Multiple takes of the solo singer can vary as the artist pleases. The audio editor can disregard any timing differences and simply choose the best take. RAAR will instantly and automatically fit the best solo track with the backing tracks, even if their original timing is not the same. This approach gives media production workflows both more creative freedom and significant time savings.
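To make the idea of fitting a backing track to a differently timed solo take more concrete, here is a minimal sketch. It is not the RAAR engine; it assumes the same musical landmarks (for example, phrase starts) have already been located in both recordings, and it simply interpolates between them. The names `warp_time`, `stretch_ratios`, `backing_marks`, and `solo_marks` are hypothetical.

```python
from bisect import bisect_right


def warp_time(t, backing_marks, solo_marks):
    """Map a time in the backing track to the matching time in the solo take.

    Both lists hold the times (in seconds) of the same landmarks, in order.
    Times between landmarks are interpolated linearly (an assumption).
    """
    if len(backing_marks) != len(solo_marks) or len(backing_marks) < 2:
        raise ValueError("need at least two matched landmarks")
    # Find the segment containing t, clamping to the first or last segment.
    i = bisect_right(backing_marks, t) - 1
    i = max(0, min(i, len(backing_marks) - 2))
    b0, b1 = backing_marks[i], backing_marks[i + 1]
    s0, s1 = solo_marks[i], solo_marks[i + 1]
    return s0 + (t - b0) * (s1 - s0) / (b1 - b0)


def stretch_ratios(backing_marks, solo_marks):
    """Per-segment factor by which the backing audio would be lengthened."""
    return [
        (solo_marks[i + 1] - solo_marks[i])
        / (backing_marks[i + 1] - backing_marks[i])
        for i in range(len(backing_marks) - 1)
    ]


# Hypothetical landmarks: the singer took the first phrase slower.
backing = [0.0, 2.0, 4.0]
solo = [0.0, 3.0, 5.0]
print(warp_time(1.0, backing, solo))
print(stretch_ratios(backing, solo))
```

In a real workflow the per-segment ratios would drive an audio time-stretching step, and the landmarks would come from audio analysis rather than being typed in by hand.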

This prototype was built on the core AI audio engine we created at Sonation, which predicts a user’s audio timing in real time and synchronizes pre-recorded media to their performance. While the initial application of this technology focused on the demanding synchronization requirements of fluid classical music performance, I developed and tested new applications for media experiences and production.
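As a rough illustration of what "predicting timing to synchronize pre-recorded media" can mean, the sketch below estimates the singer's current tempo from the landmarks she has already reached, predicts when she will reach the next one, and derives a playback rate for the recording. This is a simplified stand-in under stated assumptions, not Sonation's engine: the `Cue` type, the exponential smoothing, and every function name here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    beat: float        # position of the landmark in the score, in beats
    track_time: float  # where that landmark falls in the recording, in seconds


def predict_next_arrival(cues, sung_times, smoothing=0.5):
    """Predict when the singer will reach the next cue.

    sung_times[i] is the detected time at which the singer reached cues[i].
    Tempo is tracked as smoothed seconds-per-beat (an assumed, simple model).
    """
    if len(sung_times) < 2 or len(sung_times) >= len(cues):
        raise ValueError("need at least two reached cues and one cue ahead")
    sec_per_beat = None
    for i in range(1, len(sung_times)):
        observed = (sung_times[i] - sung_times[i - 1]) / (
            cues[i].beat - cues[i - 1].beat
        )
        if sec_per_beat is None:
            sec_per_beat = observed
        else:
            sec_per_beat = smoothing * observed + (1 - smoothing) * sec_per_beat
    n = len(sung_times)
    beats_ahead = cues[n].beat - cues[n - 1].beat
    return sung_times[-1] + beats_ahead * sec_per_beat


def playback_rate(track_pos, next_cue_track_time, now, predicted_arrival):
    """Rate at which the recording must play to hit the next cue on time."""
    remaining_live = predicted_arrival - now
    if remaining_live <= 0:
        raise ValueError("predicted arrival must be in the future")
    return (next_cue_track_time - track_pos) / remaining_live


# Hypothetical example: the recording runs at 0.5 s per beat,
# while the singer is taking about 0.6 s per beat.
cues = [Cue(0, 0.0), Cue(4, 2.0), Cue(8, 4.0), Cue(12, 6.0)]
sung = [0.0, 2.4, 4.8]
arrival = predict_next_arrival(cues, sung)
rate = playback_rate(track_pos=4.0, next_cue_track_time=cues[3].track_time,
                     now=sung[-1], predicted_arrival=arrival)
print(arrival, rate)
```

A rate below 1.0 slows the recording to follow a slower singer. Anticipating the arrival, rather than reacting after the landmark is heard, is what lets the accompaniment land together with the performer.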

Motion-responsive AR Prototype

Dance-driven Music Playback

Dance-based augmented reality

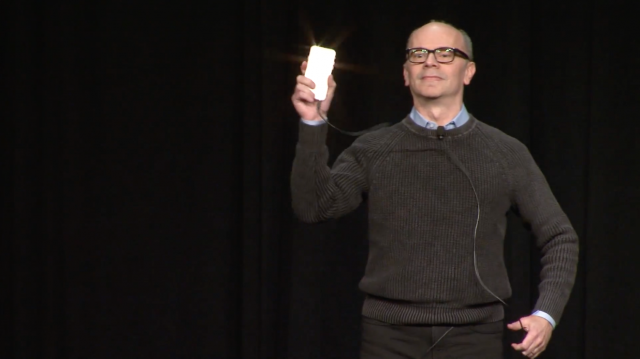

Audio can be transformed not only by your real-time singing or instrument playing: dance, running, working out, or even conducting can also be used to fit music to your own individual expression in the moment. This video shows an early prototype demo at the AR in Action conference at MIT in 2017.